The grid is planning for data centers that will never exist: Aligning transmission investment with development reality

Authors: Alex Oort Alonso, Noah Sandgren, Tony Wagler

Collaborators: Kyle Baranko, Gabrielle Stein

Executive summary

As data center load growth accelerates toward 80 GW by 2030, the U.S. grid faces an unprecedented planning challenge. This whitepaper introduces a land-first transmission planning framework that moves beyond speculative interconnection requests, integrating buildable land, permitting, and grid data to identify power-ready and power-constrained regions across Texas. Using ERCOT as a case study, we reveal where targeted grid upgrades can unlock gigawatts of stranded potential, offering planners, developers, and policymakers a faster, data-driven path to aligning grid investment with real-world development feasibility.

Introduction

Transmission planning has never been more necessary. It has also never encountered so much uncertainty.

Data center load growth is expected to reach 80 GW by 2030[1], accounting for up to 12% of total U.S. electricity demand[2]. Our current grid is not built to handle this level of demand, which in turn creates a massive need for transmission planning and buildout in the coming decade.

This next decade of electrification will be defined by concentrated, fast-moving demand from hyperscale and AI data centers. Unlike the diffuse, incremental growth of the past, today’s large loads arrive in gigawatt-scale steps where land, power, fiber, and other critical factors align.

Traditional planning methods that rely on coarse, top-down forecasts or react to one-off developer requests are not designed to account for this type of load growth. They overlook the practical realities that govern where projects can actually reach notice-to-proceed (NTP) quickly. The result is a planning process that risks overbuilding in the wrong places and under-serving the right ones, adding time, cost, and uncertainty for both utilities and developers.

This whitepaper introduces a holistic, location-aware blueprint that combines power flow modeling with the siting constraints and enablers that make or break real projects. The aim is to align grid upgrades with where development can actually occur, rather than relying on speculative interconnection requests or high-level growth curves.

We use ERCOT as our case study, given that it is one of the leaders in data center buildout, but we also call for similar approaches across other ISOs and regions.

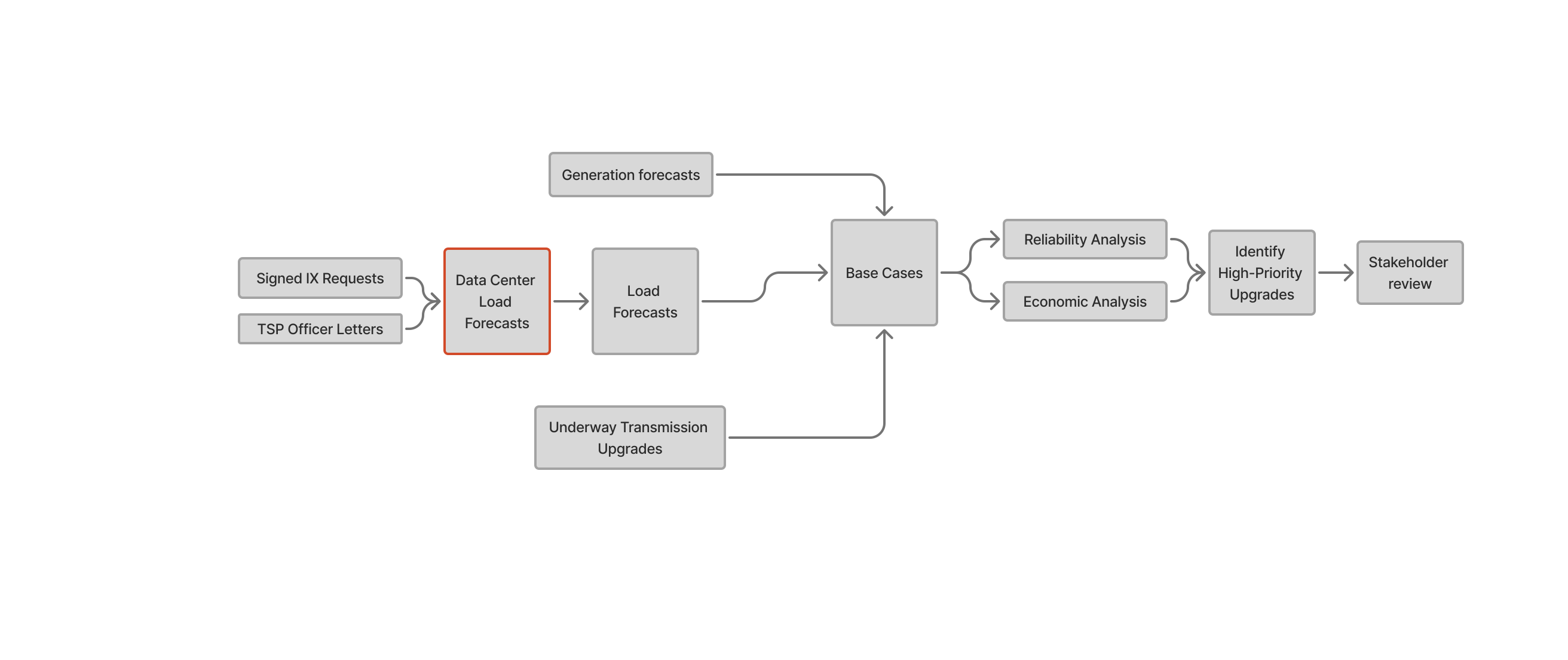

How transmission planning currently works in ERCOT

In Texas, transmission planning is led by ERCOT with extensive input from Transmission Service Providers (TSPs) and other stakeholders, primarily through the Regional Planning Group (RPG). The core analysis is the Regional Transmission Plan (RTP), a coordinated six-year reliability and economic study of the grid.

ERCOT builds planning base cases using models of generation, transmission facilities, and substation loads under normal and stressed conditions (e.g., summer peak). Load levels are anchored to bus-level submissions from TSPs and ERCOT’s weather-adjusted forecasts.

Candidate upgrades are screened and modeled for reliability, performance, and economic benefits. ERCOT then prioritizes projects and coordinates with TSPs and stakeholders through the RPG process to advance the highest-value solutions.

Why this load is different

Historically, load growth was fairly uniform, consisting of many small industrial additions, and precise project siting had limited systemwide consequences. That is no longer true. Individual data center campuses now regularly request >1 GW (roughly 2% of the average Texas load), making location a decisive factor for dispatch, flows, and upgrade needs.

If planning assumes a 1 GW load at Location A that then fails to materialize (a real risk amid speculative interconnection requests), the result is costly misallocation. In the most recent Regional Transmission Plan (RTP) report[3], ERCOT explicitly flags this challenge:

“The location of [...] load is crucial in driving flow patterns and determining transmission needs. [...] the location of loads is less predictable, especially given the much shorter lead times for load interconnections. This adds complexity to transmission planning [...] Planning practices and policies must be reviewed to address these new challenges.”

These risks are already beginning to manifest. In August 2025, Hays County rejected a rezoning request for a 200-acre data center near the Edwards Aquifer, citing water stress and existing drought restrictions. This same area, however, had recently seen major grid upgrades, including the reconductoring of a 345 kV line in 2024–2025 directly adjacent to the site, intended to support expected load that now may never materialize.

There is therefore a significant need to understand where data centers will actually be developed and to plan future grids around that reality. To achieve this, we propose a bottom-up, data-driven approach grounded in what data center developers and operators most need from a site.

Understanding key data center siting factors

For data center developers, speed-to-power at scale has become the defining priority, and it drives every siting decision. To achieve it, a site must have several key characteristics that expedite permitting, construction, and operation.

Drawing on our in-house development experience at Paces and our work with data center developers, we find that a viable site should have:

- Buildable land free from environmental or permitting barriers

- Electric infrastructure with available power capacity

- Fiber connectivity

- Reasonable water access

- Natural gas access (for backup generation)

While available power is the ultimate constraint for large loads, the other elements are often just as decisive. An otherwise perfect site can be derailed by permitting conflicts or local opposition, even if gigawatts of capacity are located at the nearest point of interconnection (POI).

Our objective is to identify all land meeting these siting criteria and realistically developable for data centers. The planning challenge is not that developers lack site preferences, but that individual interconnection requests are inherently speculative and may not materialize. By modeling these constraints systematically, rather than relying on uncertain project-by-project requests, we generate a clearer picture of where aggregate load growth is likely to concentrate and where grid investment can most effectively enable that development.

A data-driven framework for identifying power-constrained opportunities

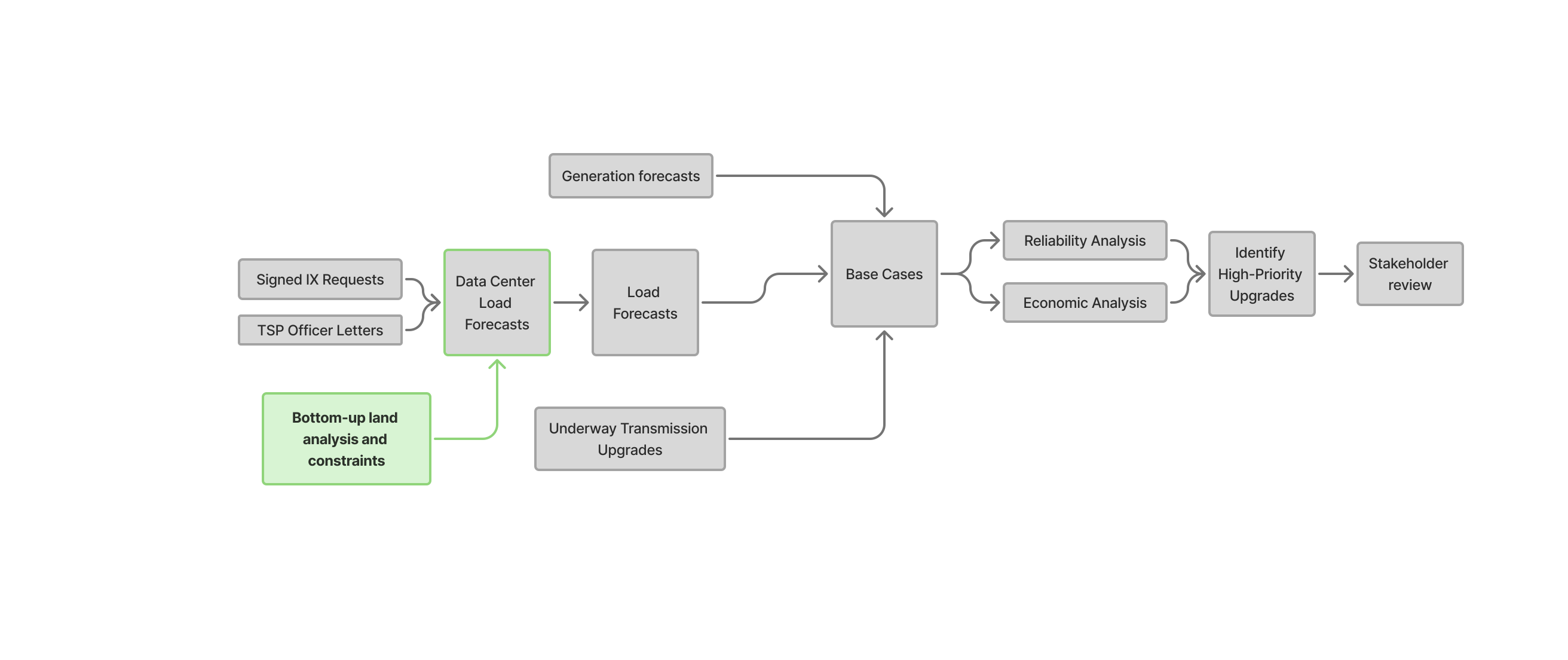

We propose a bottom-up, data-driven method that mirrors developer decision-making and converts it into planning-grade load signals.

In our analysis, we (1) identify where hyperscale sites can reach NTP fastest, (2) determine which of those locations are headroom-positive vs. headroom-limited, and (3) translate findings into bus-level loads and targeted upgrade candidates for standard reliability/economic studies.

We leverage Paces' comprehensive environmental and permitting datasets alongside our in-house power engineering team to map where development can credibly occur and how the grid can support it.

Step 1 – Mapping viable data center sites

We apply a developer-driven screen to every land parcel in Texas (13,855,465 parcels in total), according to the following criteria[4]:

Site characteristics

- Size: At least 100 acres of buildable land (for large-scale hyperscale development)

- Topography: Slope under 15°, outside wetlands and floodplains

- Permitting: Not within conservation easements; favorable zoning (or eligible for rezoning), minimum setback from residential areas

- Water: Excludes counties with drought restrictions[5]; 1 mi setback from sole-source aquifers

- Ownership: Excludes public entities, religious organizations, country clubs, educational institutions, and public infrastructure

Infrastructure proximity

- Power: Within 3 miles of a 345 kV transmission substation and within 1 mile of a 345 kV transmission line

- Fiber: Within 10 miles of a fiber optic cable

- Gas: Within 5 miles of a natural gas pipeline with a diameter ≥12"

This yields candidate sites a developer could credibly take forward, independent of current grid headroom.
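The Step 1 screen reduces to a per-parcel predicate. The sketch below assumes each parcel has already been enriched with GIS-derived attributes (buildable acreage net of steep slopes, wetlands, and floodplains, plus distances to infrastructure). The `Parcel` fields, the owner-category labels, and `is_viable` are hypothetical names; the thresholds are the ones listed above.

```python
from dataclasses import dataclass, replace

# Ownership categories excluded by the screen (hypothetical labels).
EXCLUDED_OWNERS = {"public", "religious", "country_club", "educational", "public_infrastructure"}

@dataclass(frozen=True)
class Parcel:
    parcel_id: str
    buildable_acres: float  # assumed net of slopes >= 15 deg, wetlands, and floodplains
    in_conservation_easement: bool
    zoning_ok: bool  # favorable zoning or eligible for rezoning
    meets_residential_setback: bool
    county_has_drought_restrictions: bool
    owner_type: str
    mi_to_sole_source_aquifer: float
    mi_to_345kv_substation: float
    mi_to_345kv_line: float
    mi_to_fiber: float
    mi_to_12in_gas_pipeline: float  # nearest pipeline with diameter >= 12"

def is_viable(p: Parcel) -> bool:
    """Apply the Step 1 site and infrastructure thresholds to one parcel."""
    site_ok = (
        p.buildable_acres >= 100
        and not p.in_conservation_easement
        and p.zoning_ok
        and p.meets_residential_setback
        and not p.county_has_drought_restrictions
        and p.mi_to_sole_source_aquifer >= 1.0
        and p.owner_type not in EXCLUDED_OWNERS
    )
    infra_ok = (
        p.mi_to_345kv_substation <= 3.0
        and p.mi_to_345kv_line <= 1.0
        and p.mi_to_fiber <= 10.0
        and p.mi_to_12in_gas_pipeline <= 5.0
    )
    return site_ok and infra_ok

# Hypothetical parcel; positional arguments follow the field order above.
site = Parcel("TX-000001", 240.0, False, True, True, False, "private",
              4.2, 1.8, 0.4, 2.5, 3.1)
print(is_viable(site))                             # True
print(is_viable(replace(site, mi_to_fiber=12.0)))  # False
```

Because every criterion is a plain threshold, the values can be adjusted to an individual developer's priorities without changing the structure of the screen.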

Step 2 – Assessing grid headroom and identifying upgrade pockets

With a list of developable, fiber- and gas-adjacent land, we next ask two questions:

1. Where is the grid best suited to serve new data center withdrawals today?

2. Where does a single bottleneck block multiple promising sites that a targeted grid upgrade could unlock?

Using ERCOT’s 2028 summer-peak planning case, we evaluate every 345 kV bus as a prospective POI. For each POI, we gradually increase the amount of power withdrawn at that bus while proportionally adjusting nearby generation to supply it. The result for each bus is the maximum additional load that the grid can support before any line or transformer exceeds its rating under normal or contingency (N-1) conditions.[6] This yields, per bus:

- Withdrawal Capacity (MW) - The maximum amount of load before overloading the first element

- Limiting Element - The transmission line or transformer that is overloaded first
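The per-bus procedure above depends on how strongly each element's flow responds to load added at a POI once generation is redispatched to serve it. The sketch below computes these sensitivities from power transfer distribution factors (PTDFs). It assumes a lossless DC power-flow approximation rather than the full AC N-1 analysis the study used. The three-bus network, the equal redispatch weights, and the function names `ptdf_matrix` and `withdrawal_sensitivity` are hypothetical.

```python
import numpy as np

def ptdf_matrix(n_bus, lines, slack=0):
    """DC power transfer distribution factors.

    lines: list of (from_bus, to_bus, reactance_pu).
    Returns an (n_lines, n_bus) array: MW of flow on each line (from -> to)
    per MW injected at each bus and withdrawn at the slack bus.
    """
    B = np.zeros((n_bus, n_bus))
    for i, j, x in lines:
        B[i, i] += 1.0 / x
        B[j, j] += 1.0 / x
        B[i, j] -= 1.0 / x
        B[j, i] -= 1.0 / x
    keep = [k for k in range(n_bus) if k != slack]
    # theta[i, m] = voltage angle at bus i per unit injected at bus m (slack angle = 0)
    theta = np.zeros((n_bus, n_bus))
    theta[np.ix_(keep, keep)] = np.linalg.inv(B[np.ix_(keep, keep)])
    return np.array([(theta[i] - theta[j]) / x for i, j, x in lines])

def withdrawal_sensitivity(ptdf, poi_bus, gen_buses, gen_weights):
    """Change in each line's flow per MW withdrawn at poi_bus, with the extra
    load served by generators at gen_buses in proportion to gen_weights."""
    w = np.asarray(gen_weights, dtype=float)
    w = w / w.sum()  # proportional redispatch shares
    return ptdf[:, gen_buses] @ w - ptdf[:, poi_bus]

# Toy three-bus loop with equal reactances (hypothetical network).
lines = [(0, 1, 0.1), (1, 2, 0.1), (0, 2, 0.1)]
P = ptdf_matrix(3, lines)
d = withdrawal_sensitivity(P, poi_bus=1, gen_buses=[0, 2], gen_weights=[1, 1])
print(d)  # approximately [0.5, -0.5, 0.0]
```

Because the redispatch weights are normalized to sum to one, the result does not depend on which bus is chosen as the slack. Post-contingency sensitivities would also need line outage distribution factors, which this sketch omits.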

Positive withdrawal capacity indicates available headroom; negative values indicate that the bus worsens a pre-existing overload (i.e., the headroom of those elements has already been exceeded before any withdrawal). The magnitude of a negative value reflects how severely constrained the location already is.
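Under a linear approximation, the withdrawal capacity has a closed form. Each monitored element (or element-contingency pair, for N-1) reaches its rating at withdrawal x = (rating − base flow) / sensitivity, and the bus capacity is the smallest such x. This also reproduces the negative values described above: if an element is already past its rating in the base case and the POI pushes it further, its x is negative. The sketch is illustrative only, since the study itself ramped withdrawals in an AC power flow. The `Monitored` type, the element names, and the 0.03 sensitivity cutoff are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Monitored:
    name: str            # element, or "element | contingency" for an N-1 pair
    base_flow_mw: float  # signed flow in the planning case before new load
    rating_mw: float     # applicable thermal rating

def withdrawal_capacity(elements, sensitivity, min_sens=0.03):
    """Linearized withdrawal capacity (MW) and limiting element for one POI.

    sensitivity maps element name -> change in that element's flow (MW) per
    MW withdrawn at the POI, with generation redispatched to serve it.
    Elements with |sensitivity| below min_sens (hypothetical cutoff) are
    treated as unaffected. Returns (inf, None) if no element responds.
    """
    capacity, limiting = float("inf"), None
    for el in elements:
        d = sensitivity.get(el.name, 0.0)
        if abs(d) < min_sens:
            continue
        # Flow at withdrawal x is base_flow + d * x; solve for the x at which it
        # reaches the rating in the direction the withdrawal pushes it.
        limit = el.rating_mw if d > 0 else -el.rating_mw
        x = (limit - el.base_flow_mw) / d
        # x < 0 means the element is already past its rating and this POI
        # makes it worse.
        if x < capacity:
            capacity, limiting = x, el.name
    return capacity, limiting

elements = [
    Monitored("Line A", 300.0, 400.0),
    Monitored("Xfmr B", -150.0, 200.0),
    Monitored("Line C", 520.0, 500.0),  # already overloaded in the base case
]
print(withdrawal_capacity(elements, {"Line A": 0.25, "Xfmr B": -0.5}))   # (100.0, 'Xfmr B')
print(withdrawal_capacity(elements, {"Line A": 0.25, "Line C": 0.125}))  # (-160.0, 'Line C')
```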

We associate each viable parcel (Step 1) with its nearest 345 kV substation/bus and aggregate to the POI level. This produces a clear view of where developable acreage coincides with available headroom, indicating locations best suited for hosting near-term load.
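The parcel-to-POI association can be sketched as a nearest-neighbour lookup on parcel centroids followed by an acreage sum. The sketch assumes centroid coordinates are available and reuses the 3-mile substation radius from Step 1 as the association cutoff. The data layout and function names are hypothetical. The brute-force search shown here would need a spatial index at state scale.

```python
import math
from collections import defaultdict

EARTH_RADIUS_MI = 3958.8  # mean Earth radius in miles

def haversine_mi(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_MI * math.asin(math.sqrt(a))

def acres_by_poi(parcels, substations, max_dist_mi=3.0):
    """Assign each parcel centroid to its nearest substation and sum acreage per POI.

    Parcels farther than max_dist_mi from every substation are dropped.
    """
    totals = defaultdict(float)
    for p in parcels:
        nearest, dist = min(
            ((s["name"], haversine_mi(p["lat"], p["lon"], s["lat"], s["lon"])) for s in substations),
            key=lambda pair: pair[1],
        )
        if dist <= max_dist_mi:
            totals[nearest] += p["acres"]
    return dict(totals)

# Hypothetical substations and screened parcels.
substations = [
    {"name": "Sub A", "lat": 32.45, "lon": -99.73},
    {"name": "Sub B", "lat": 31.00, "lon": -97.00},
]
parcels = [
    {"lat": 32.45, "lon": -99.73, "acres": 150.0},
    {"lat": 32.46, "lon": -99.73, "acres": 200.0},  # ~0.7 mi from Sub A
    {"lat": 31.00, "lon": -97.01, "acres": 120.0},  # ~0.6 mi from Sub B
    {"lat": 33.45, "lon": -99.73, "acres": 500.0},  # ~69 mi away: dropped
]
print(acres_by_poi(parcels, substations))  # {'Sub A': 350.0, 'Sub B': 120.0}
```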

For transmission planning, we want to identify where existing constraints most limit developable sites and which upgrades would release the most capacity.[7] We group POIs and their surrounding viable land by their limiting element to highlight high-leverage bottlenecks, where a single upgrade could unlock multiple ready-to-build sites. We estimate each upgrade’s impact by excluding the element responsible for the first binding constraint and measuring the change in withdrawal capacity relative to the pre-upgrade case. The resulting increase in capacity represents the additional load enabled by the upgrade.
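This is a simplified version of the upgrade-impact estimate. If each element's withdrawal limit at a POI is known, relaxing the first binding element lets the next-tightest limit bind, so the gain is the gap between the two. This is a linear shortcut that assumes the limits are independent. The study re-ran the power flow with the limiting element excluded, which also captures flow redistribution. Element names, POI data, and the `cap_mw` study ceiling are hypothetical.

```python
from collections import defaultdict

def upgrade_gains(limits_by_poi, cap_mw=2000.0):
    """Estimate MW unlocked per element if its constraint were relaxed.

    limits_by_poi maps POI -> {element: withdrawal MW at which that element
    first exceeds its rating}; each inner dict is assumed non-empty.
    cap_mw is a hypothetical study ceiling used when no other limit remains.
    """
    gains = defaultdict(float)
    for limits in limits_by_poi.values():
        ranked = sorted(limits.items(), key=lambda kv: kv[1])
        element, before = ranked[0]  # first binding element and current capacity
        # With that element relaxed, the next-tightest limit (or the cap) binds.
        after = ranked[1][1] if len(ranked) > 1 else cap_mw
        gains[element] += min(after, cap_mw) - min(before, cap_mw)
    return dict(gains)

limits_by_poi = {
    "POI 1": {"Line X": -120.0, "Line Y": 300.0, "Xfmr Z": 650.0},
    "POI 2": {"Line X": 50.0, "Xfmr Z": 400.0},
    "POI 3": {"Xfmr Z": 250.0, "Line Y": 900.0},
}
print(upgrade_gains(limits_by_poi))  # {'Line X': 770.0, 'Xfmr Z': 650.0}
```

Summing gains by element across POIs turns per-bus results into a ranked list of candidate upgrades.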

Results: viable sites, headroom capacity, and upgrade priorities across Texas

Viable site distribution

Setting headroom aside, the land that meets the criteria above, and is therefore suitable for fast data center development, is distributed as follows. It clusters around 345 kV lines and substations and is highly concentrated in Northwest and Southeast Texas.

In total, 765,000 acres of land are suitable for data center development. Assuming 1 acre can support 1 MW of data center load, this equates to 765 GW, nearly ten times the ~80 GW of U.S. data center load expected by 2030. Total land availability is therefore not the real constraint; what matters is viable land near transmission infrastructure with available power.

Interestingly, we find that this land is heavily clustered around a handful of POIs. Only ~230 substations across the entire state of Texas have suitable data center land around them (within 3 mi), and the top 10% of substations hold >30% of the total suitable land. For this indicative analysis, we intentionally focused on substations as connection points, recognizing that transmission lines between substations would offer additional line tap opportunities in a more detailed study. Even when we only consider substations as POIs, our methodology still significantly reduces the number of locations to consider for planning.
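The concentration statistic (share of suitable land held by the top 10% of substations) is a sorted cumulative share. Below is a minimal sketch with hypothetical substation names and acreages. The function name `top_share` is also hypothetical.

```python
import math

def top_share(acres_by_poi, top_pct=10):
    """Fraction of total viable acreage held by the top `top_pct` percent of POIs."""
    values = sorted(acres_by_poi.values(), reverse=True)
    k = max(1, math.ceil(len(values) * top_pct / 100))  # number of POIs in the top slice
    return sum(values[:k]) / sum(values)

# Hypothetical: one large POI and nine smaller ones.
acres = {"S0": 400.0, **{f"S{i}": 100.0 for i in range(1, 10)}}
share = top_share(acres)  # top 1 of 10 POIs holds 400 / 1300 of the land
print(round(share, 3))
```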

Withdrawal capacity distribution

By overlaying our power flow withdrawal analysis on the set of viable data center sites, we can estimate which sites would be best served by today’s grid. Only ~17% of substations near these sites have positive withdrawal capacity, meaning they can support new load before any monitored element overloads.

These substations cluster mainly in Northwest and Central Texas. They represent the areas most immediately ready to accommodate data center growth, likely to be absorbed by developers in the near term.

When we compare these results with data centers currently planned or under construction (see map below), we see strong overlap, supporting the validity of our bottom-up approach and indicating that it reflects real-world siting patterns. For example, OpenAI x Crusoe’s Stargate Project falls within the high-capacity window we identify near Abilene.

The rest of the data center viable land shown in Figure 3 is essentially ‘stranded’ due to a lack of headroom in nearby buses.
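The split between headroom-positive POIs and stranded land follows directly from the per-POI results. This sketch assumes, as the text does, that land at POIs with zero or negative withdrawal capacity counts as stranded. POI names and values are hypothetical.

```python
def headroom_split(capacity_by_poi, acres_by_poi):
    """Return (share of POIs with positive withdrawal capacity, stranded acres).

    Acreage at POIs with zero or negative capacity is counted as stranded.
    Every POI in acres_by_poi is assumed to have a capacity result.
    """
    n_positive = sum(1 for mw in capacity_by_poi.values() if mw > 0)
    share_positive = n_positive / len(capacity_by_poi)
    stranded = sum(a for poi, a in acres_by_poi.items() if capacity_by_poi[poi] <= 0)
    return share_positive, stranded

capacity = {"A": 250.0, "B": -40.0, "C": 0.0, "D": 900.0}
acres = {"A": 1000.0, "B": 3000.0, "C": 500.0, "D": 200.0}
print(headroom_split(capacity, acres))  # (0.5, 3500.0)
```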

Determining upgrade hotspots

To target upgrades, we trace each headroom-constrained site back to its limiting element (the first element to overload in the transfer analysis). Aggregating constrained acreage by limiting element reveals that a small number of bottlenecks dominate: just five overloaded elements collectively cap withdrawal capacity for ~250,000 acres of viable land (roughly a third of all viable data center land). The largest clusters tied to single elements appear in Northwest Texas around Abilene, where one fix can unlock many neighboring sites.
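The bottleneck aggregation amounts to grouping constrained acreage by limiting element and ranking the results. The sketch below uses hypothetical POIs and elements and treats a POI as constrained when its withdrawal capacity is zero or negative. The function name `bottleneck_ranking` is also hypothetical.

```python
from collections import Counter

def bottleneck_ranking(poi_results, acres_by_poi, top_n=5):
    """Rank limiting elements by the viable acreage they hold back.

    poi_results maps POI -> (withdrawal_capacity_mw, limiting_element).
    Only POIs without positive headroom count as constrained.
    Returns the top_n (element, acres) pairs and their share of all viable acres.
    """
    constrained = Counter()
    for poi, (mw, element) in poi_results.items():
        if mw <= 0:
            constrained[element] += acres_by_poi.get(poi, 0.0)
    top = constrained.most_common(top_n)
    share = sum(a for _, a in top) / sum(acres_by_poi.values())
    return top, share

poi_results = {
    "P1": (-50.0, "Line X"),
    "P2": (-10.0, "Line X"),
    "P3": (-5.0, "Xfmr Z"),
    "P4": (300.0, "Line Y"),
}
acres = {"P1": 4000.0, "P2": 2000.0, "P3": 1000.0, "P4": 3000.0}
top, share = bottleneck_ranking(poi_results, acres, top_n=1)
print(top, share)  # [('Line X', 6000.0)] 0.6
```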

This creates a concrete signal for planners and developers: focus upgrades on the few elements constraining the largest aggregated acreage. By starting from end-use feasibility (developable land near POIs) and working backward through the limiting elements, we narrow the set of potential projects to a short list of high-leverage upgrades. This materially reduces planning uncertainty and accelerates load growth in the places where data centers can actually be built.

To quantify how much capacity each upgrade would unlock, we calculate the change in withdrawal capacity at a given POI if its limiting element were no longer constrained. Plotting the results below, we find that large amounts of capacity in the Abilene and Texas Triangle areas could be unlocked through single upgrades.

While some of these grid constraints are already being addressed, many are not. This analysis pinpoints where the next wave of strategic upgrades should occur to unlock the largest share of viable land. For data center developers, it also serves as a forward-looking signal of where capacity is most likely to emerge, allowing projects and transmission planning to move in coordination, guided by bottom-up constraints.

Implications for grid planners and developers

As noise around data center load growth and speculative requests grows, transmission planning is becoming increasingly uncertain. With GW-scale additions at stake, overbuilding in the wrong area is becoming prohibitively expensive. To address this, we propose a bottom-up, land-centered approach. It begins with all buildable land that has a credible permitting pathway for data centers and works backward to identify the transmission constraints that most limit those sites.

Our ERCOT case study demonstrates this in practice. We screened every parcel in Texas against developer-driven criteria and identified roughly 765,000 acres of land suitable for fast hyperscale development. That opportunity is highly concentrated: only about 230 substations are situated near this viable land. When we overlaid power-flow results, only ~17% of nearby substations showed positive withdrawal headroom today; the rest of the data-center-eligible land is effectively “stranded” by existing constraints. A small set of recurring overloaded elements accounts for the majority of the stranded opportunity. This indicates that targeted upgrades, not scattered fixes, can unlock multiple clusters of developable land at once.

For data center developers, this framework offers a roadmap to de-risk site selection and accelerate deployment. Rather than pursuing sites based solely on available land or speculative grid capacity, developers can identify locations where all constraints align: developable parcels, permitting feasibility, and either existing transmission headroom or high-probability near-term upgrades. Sites in our positive-headroom zones offer the fastest path to commercial operation. For headroom-constrained sites, developers gain visibility into which specific transmission bottlenecks block their target areas and can coordinate early with TSPs on upgrade timing or evaluate alternative approaches such as behind-the-meter configurations. This visibility transforms site selection from a reactive queue position to a strategic choice based on realistic speed-to-power timelines.

Grid investment must be anchored in development reality, not speculation. While this paper focuses on transmission planning, the framework addresses a coordination failure affecting all stakeholders. ISOs need location-specific load forecasts to justify infrastructure investment. Developers need certainty about speed-to-power to commit capital. Regulators need to avoid stranded assets that burden ratepayers. Constraint-informed planning solves all three: it grounds grid investment in development constraints, ensuring transmission capacity lands where projects actually get built.

Footnotes:

1. https://www.mckinsey.com/industries/private-capital/our-insights/how-data-centers-and-the-energy-sector-can-sate-ais-hunger-for-power

2. https://escholarship.org/uc/item/32d6m0d1

3. https://www.ercot.com/gridinfo/planning

4. These parameters reflect typical data center requirements but can be adjusted based on individual developer priorities and project-specific constraints.

5. Based on Public Water Systems in Texas that are currently under mandates to reduce water consumption to avoid shortages.

6. Results are derived from ERCOT’s 2028 SUMMER SSWG planning case using full AC N-1 criteria and ERCOT’s official contingency and monitoring sets. For each candidate bus, the MW withdrawal at that bus is incrementally increased, and generation in the study area is proportionally redispatched to serve the added load. Per-bus transfer limits are the minimum AC-verified transfer at which any monitored element first exceeds its thermal rating under system-intact or post-contingency conditions.

7. Our analysis models purely grid-connected data center load to establish baseline transmission requirements. We acknowledge that behind-the-meter (BTM) configurations with co-located solar and storage, which we have previously analyzed, can significantly reduce net transmission withdrawal in favorable locations. While this may moderate demand in areas with high solar resource and available land, most deployments will remain grid-connected, particularly for inference loads that require urban proximity and grid reliability. Our constraint analysis identifies where transmission capacity is needed, with the understanding that BTM adoption could reduce actual demand in specific regions below our grid-served baseline.